Why IL (Imitation Learning)?

- Among the reinforcement learning algorithms covered earlier, DQN has the following problem.

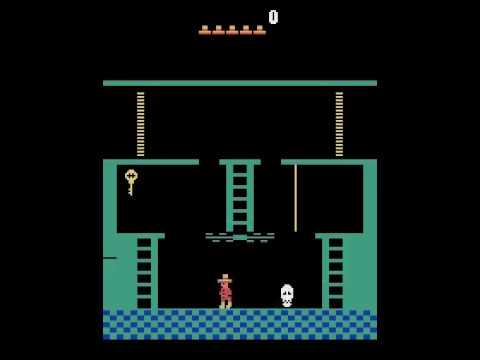

- In a game like Montezuma's Revenge, where each pixel changes many times (the room in the game keeps changing), exploration is difficult for standard DQN.

- Result

The DQN on the left explored only 2 rooms, and even the improved DQN on the right failed to cover every scenario.

- The game Montezuma's Revenge

- https://www.youtube.com/watch?v=JR6wmLaYuu4

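- At around the 8-second mark, every pixel changes as the character moves into a different room.

- In reinforcement learning terms, this is a situation where taking a certain action changes every State that follows.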

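- Solution

- Learn from the experience of an expert! (Imitation Learning)

- This is useful when rewards take a long time to arrive, when the reward is ambiguous, or when the desired policy is hard to code by hand.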

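- So how does Imitation Learning work?

- The reward is conveyed through demonstrations: by showing how the task is actually done, the reward is given implicitly.

- For example, to build a self-driving car, a skilled driver drives the car and passes along sequences of States and Actions, and the Agent learns from them.

- However, this approach is inefficient in cases where rewards are assigned one by one or the Agent is made to follow a specific policy.

- As with DQN, the State, Action, and Transition model are given, but the reward function R is not. Instead, demonstrations of the form (s0,a0,s1,a1,…) are given.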

Behavioral Cloning

- Learn the expert's policy directly through supervised learning! (Like Machine Learning)

- Choose a policy class (e.g., neural network, decision tree, …).

- Train the Agent with the expert's states as the input of the supervised learning model and the expert's actions as its output.
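The Behavioral Cloning recipe above (pick a policy class, then fit expert states to expert actions) can be sketched roughly as follows. This is only a minimal illustration, not a reference implementation: the expert rule `expert_policy`, the 2-dimensional random states, and the choice of logistic regression as the policy class are all assumptions made for the example.

```python
import numpy as np

def expert_policy(states):
    """Hypothetical expert: action 1 when the first feature is positive, else action 0."""
    return (states[:, 0] > 0).astype(int)

def train_behavioral_cloning(states, actions, lr=0.5, epochs=500):
    """Fit a logistic-regression policy to (state, action) pairs by gradient descent."""
    n, d = states.shape
    w = np.zeros(d)
    b = 0.0
    for _ in range(epochs):
        logits = states @ w + b
        probs = 1.0 / (1.0 + np.exp(-logits))
        grad = probs - actions              # gradient of the cross-entropy loss w.r.t. the logits
        w -= lr * (states.T @ grad) / n
        b -= lr * grad.mean()
    return w, b

def cloned_policy(states, w, b):
    """Greedy action of the learned policy."""
    return (states @ w + b > 0).astype(int)

rng = np.random.default_rng(0)

# Expert demonstrations: states are the inputs, expert actions are the labels.
demo_states = rng.normal(size=(500, 2))
demo_actions = expert_policy(demo_states)
w, b = train_behavioral_cloning(demo_states, demo_actions)

# Agreement with the expert on fresh states drawn from the same distribution.
test_states = rng.normal(size=(200, 2))
accuracy = (cloned_policy(test_states, w, b) == expert_policy(test_states)).mean()
print(f"agreement with expert: {accuracy:.2f}")
```

Note that the agreement is measured on states drawn from the same distribution as the demonstrations; it says nothing about states the learned policy reaches on its own.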

- Problems

- Compounding Error

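- Most Machine Learning assumes the data are i.i.d. (independent and identically distributed).

- In reinforcement learning, however, independence usually cannot be guaranteed, because most of the data is a sequence over time.

- As a result, a policy learned this way does not take into account whether its current state has drifted away from the expert's data; it simply expects to take a particular action in a particular state.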

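- Example: a self-driving car on a circular track

- The Agent learns the path driven by the expert (shown in blue), but early on it makes a small error and drives slightly too far to the outside.

- If the Agent then takes a corner at a given section as the expert data dictates, without accounting for the fact that the car is currently off to the outside, it crashes.

- In other words, a very small mistake at time step t keeps producing errors at the following time steps t+1, t+2, …, and learning eventually fails.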

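- Solution (DAgger: Dataset Aggregation)

- When the Agent goes down the wrong path, it asks the expert, "Tell me which action I should take here!"

- However, this is only possible in very limited situations, since an expert must be available to answer those queries.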
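The DAgger idea described above (let the learner drive, ask the expert what it should have done in the states it actually visited, add those labels to the dataset, retrain) might look roughly like the loop below. It is a toy sketch under stated assumptions: the 1-D lane position, the dynamics in `rollout`, the expert rule `expert_action`, and the linear policy class are all hypothetical and chosen only to keep the example short.

```python
import numpy as np

def expert_action(x):
    """Hypothetical expert: steer back toward the lane center at x = 0."""
    return np.clip(-0.5 * x, -1.0, 1.0)

def fit_policy(states, actions):
    """Least-squares fit of a linear policy a = theta * x."""
    return float(states @ actions / (states @ states))

def rollout(theta, rng, horizon=20):
    """Run the learner's own policy and record the states it visits."""
    x = rng.normal()
    visited = []
    for _ in range(horizon):
        visited.append(x)
        x = x + theta * x + 0.3 * rng.normal()   # assumed toy dynamics with noise
    return np.array(visited)

def dagger(n_iters=5, seed=0):
    rng = np.random.default_rng(seed)
    # Start from plain behavioral cloning on expert-labeled states.
    states = rng.normal(size=20)
    actions = expert_action(states)
    theta = fit_policy(states, actions)
    for _ in range(n_iters):
        new_states = rollout(theta, rng)             # states the learner actually reaches
        new_actions = expert_action(new_states)      # query the expert on those states
        states = np.concatenate([states, new_states])    # aggregate the dataset
        actions = np.concatenate([actions, new_actions])
        theta = fit_policy(states, actions)          # retrain on everything so far
    return theta, states, actions

theta, states, actions = dagger()
print(f"learned gain: {theta:.3f}, dataset size: {len(states)}")
```

The expert call inside the loop is exactly the part that limits the method in practice: every iteration needs the expert to label new states on demand.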

Inverse Reinforcement Learning

- This approach looks at the expert's policy and works out the reward function from it.

- Imitation Learning does not receive the reward function R as input; it receives a demonstration sequence (s0,a0,s1,a1,…) instead, and uses it to figure out the reward.

- Note that this method assumes the expert's policy is optimal.

- Problem

- Many different reward functions can be consistent with the same demonstrations.

- Solution: Linear value function approximation

- The reward is defined as R(s) = w^T x(s), where w is a weight vector and x(s) is the feature vector of state s. The goal is to find the weight vector w from the given demonstrations.

- In other words, the value can be read as the learned weight vector w multiplied by the features of the states that appear often. → Because we assumed the expert's policy is optimal, states whose features show up frequently end up with a high reward.
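To make the linear reward concrete, here is a small sketch that computes R(s) = w^T x(s) and the discounted frequency of state features along a demonstration. The 3-state toy problem, the one-hot features, the discount factor `gamma`, and the particular `w` are assumptions for illustration only; actions are omitted from the trajectory because the reward here depends on states alone.

```python
import numpy as np

N_STATES = 3  # hypothetical toy state space: states 0, 1, 2

def one_hot_features(state):
    """x(s): a one-hot feature vector (an assumed, very simple feature map)."""
    x = np.zeros(N_STATES)
    x[state] = 1.0
    return x

def linear_reward(w, state):
    """R(s) = w^T x(s)."""
    return float(w @ one_hot_features(state))

def feature_expectation(trajectories, gamma):
    """Average discounted sum of state features over the demonstrations."""
    mu = np.zeros(N_STATES)
    for traj in trajectories:
        for t, state in enumerate(traj):
            mu += gamma**t * one_hot_features(state)
    return mu / len(trajectories)

# One expert demonstration, states only: s0=0, s1=1, s2=2, s3=2.
expert_trajectories = [[0, 1, 2, 2]]
mu_expert = feature_expectation(expert_trajectories, gamma=0.5)

w = np.array([0.0, 0.0, 1.0])         # candidate weights: only state 2 is rewarded
expert_value = float(w @ mu_expert)   # discounted return of the demonstration under w

print(mu_expert)      # [1.    0.5   0.375]
print(expert_value)   # 0.375
```

With `w` set to all zeros, every trajectory gets value 0, so any policy looks optimal; that is one simple instance of the ambiguity noted above.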

Apprenticeship Learning

- This goes in a similar direction to the Inverse RL above.

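- What is added is the last equation, equation 6.

- π*: the optimal policy provided by the expert → a value we already know

- π: a policy other than the one provided by the expert

- We need to find a policy π whose feature expectations are close to those of π*, and a w for which the values of π and π* differ only slightly.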

- In this way, we can obtain a policy that is sufficiently close to the optimal policy, regardless of the reward function.
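One way to see why matching the expert's feature frequencies is enough "regardless of the reward function": for a linear reward with weights bounded by 1 in absolute value, the value gap |w·μ(π) − w·μ(π*)| can never exceed the L1 distance between the two feature-expectation vectors. The check below is a numerical sketch of that bound, not the equation from the original notes; the symbol μ, the two example vectors, and the bound on w are assumptions introduced here.

```python
import numpy as np

def value_gap(w, mu_policy, mu_expert):
    """|w^T mu(pi) - w^T mu(pi*)| for a linear reward with weights w."""
    return abs(float(w @ (mu_policy - mu_expert)))

rng = np.random.default_rng(0)

# Hypothetical discounted feature expectations of the expert and of a learned policy.
mu_expert = np.array([1.0, 0.5, 0.375])
mu_policy = np.array([0.9, 0.6, 0.35])

# L1 distance between the two feature expectations.
l1_gap = float(np.abs(mu_policy - mu_expert).sum())

# Try many reward weights with every entry in [-1, 1].
candidate_ws = rng.uniform(-1.0, 1.0, size=(1000, 3))
worst_gap = max(value_gap(w, mu_policy, mu_expert) for w in candidate_ws)

print(f"L1 distance: {l1_gap:.3f}, worst value gap found: {worst_gap:.3f}")
```

Whatever bounded `w` the true reward uses, the learned policy's value stays within `l1_gap` of the expert's, which is why the exact reward function does not need to be recovered.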